Machine Learning Hub

A platform for the Tapis Framework, designed to streamline the machine learning workflow for researchers using TACC's HPC systems. This open-source project is under active development.

Web Application

Full-Stack

Technologies used

- OpenAPI v3

- Flask

- FastAPI

- TypeScript

- React

- Docker

- Kubernetes

- Figma

- Hugging Face Hub API

- Tapis API

Team: Cloud & Interactive Computing at the Texas Advanced Computing Center.

Machine learning plays a pivotal role in research by enabling the extraction of meaningful insights from vast and complex datasets, accelerating data analysis and pattern recognition. Its ability to uncover hidden relationships and trends empowers cross-disciplinary decision-making, predictive modeling, and new discoveries.

However, users without a technical background may struggle with the complexity of machine learning models, both in implementing them and in interpreting their results. This underscores the need for user-friendly interfaces and tools that bridge this gap.

In the Cloud and Interactive Computing (CIC) group at the Texas Advanced Computing Center (TACC), we are developing the Machine Learning Hub Portal (ML Hub), which integrates seamlessly with the Tapis Framework. As a user-friendly platform, ML Hub aims to improve the experience of developers, scientists, and researchers working on machine learning research.

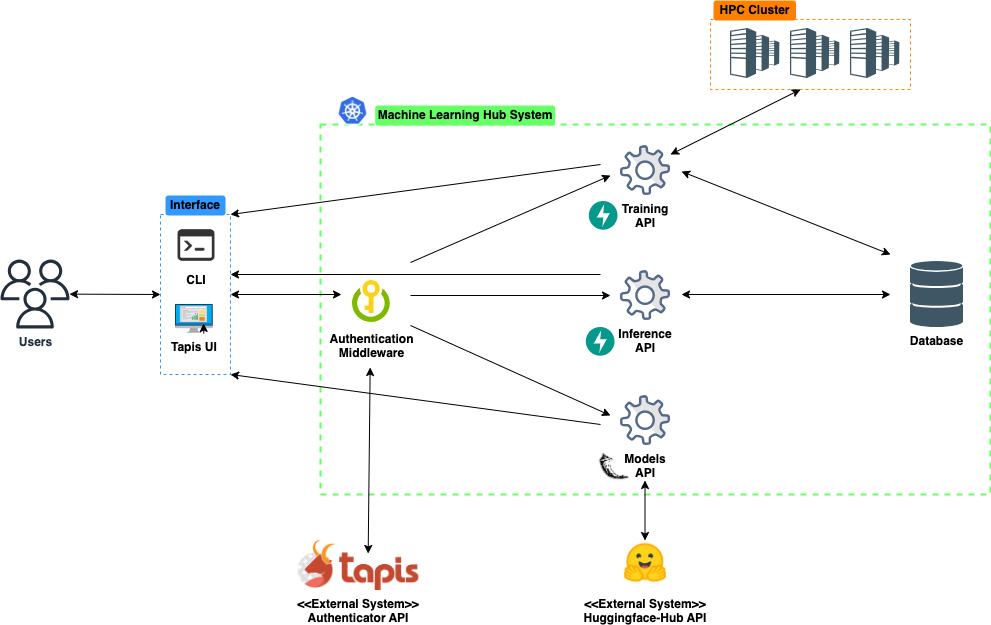

ML Hub is designed as a set of user-friendly microservices. Each service provides an independent REST API over HTTP, defined with OpenAPI v3 specifications and implemented in Python using Flask. By integrating Hugging Face's API into ML Hub, we aim to give developers access to open-source, pre-trained machine learning models so they can harness state-of-the-art AI capabilities. All service requests are authenticated with a JSON Web Token (JWT) issued by the Tapis Authenticator API.

Core features of ML Hub:

- Models Overview: The portal showcases a snapshot of the top Hugging Face models, allowing users to filter by author and view model details, including whether an inference server is available.

- Models Download: Users can download a specific model through the portal. The application is version-aware: it fetches the latest version of the model and generates download links for it.

- Inference Client: The inference client lets users spin up or spin down a server on TACC’s HPC systems for machine learning model inference, and displays model results and metadata to the user. It is a fast way to get started, test different models, and prototype AI products.

- Training Engine: The ML Hub Portal lets users fine-tune machine learning models on their own datasets and submit the training jobs to TACC’s HPC systems. Users can quickly showcase their fine-tuned models without having to deal with the complexities of the underlying technologies.
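The Models Overview and Models Download features map naturally onto the public Hugging Face Hub HTTP endpoints. The sketch below only builds URLs (it makes no network calls), assumes that "top" models means most-downloaded, and uses hypothetical function names rather than ML Hub's actual code. The model info consumed by `pinned_download_links` would come from the Hub's model-info endpoint (for example `GET /api/models/{repo_id}/revision/main`), whose response includes the resolved commit `sha` and a `siblings` list of files; a placeholder dict stands in for it here.

```python
from typing import List, Optional
from urllib.parse import quote, urlencode

HF_BASE = "https://huggingface.co"


def models_overview_url(author: Optional[str] = None, limit: int = 10) -> str:
    """Hub API query for the most-downloaded models, optionally filtered by author."""
    params = {"sort": "downloads", "direction": "-1", "limit": str(limit)}
    if author:
        params["author"] = author
    return f"{HF_BASE}/api/models?{urlencode(params)}"


def download_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Direct file URL for a branch, tag, or commit hash ('/' in revision is escaped)."""
    return f"{HF_BASE}/{repo_id}/resolve/{quote(revision, safe='')}/{quote(filename)}"


def pinned_download_links(model_info: dict) -> List[str]:
    """Links for every file, pinned to the commit the model info describes.

    Pinning to the commit hash instead of 'main' keeps each link pointing at
    the same files even if the repository is updated later.
    """
    sha = model_info["sha"]
    return [
        download_url(model_info["id"], sibling["rfilename"], revision=sha)
        for sibling in model_info.get("siblings", [])
    ]


# Placeholder shaped like the Hub's model-info JSON (not real data).
info = {
    "id": "google-bert/bert-base-uncased",
    "sha": "0123abcd",
    "siblings": [{"rfilename": "config.json"}, {"rfilename": "model.safetensors"}],
}
print(models_overview_url(author="google-bert", limit=5))
# https://huggingface.co/api/models?sort=downloads&direction=-1&limit=5&author=google-bert
for link in pinned_download_links(info):
    print(link)
```

In a Python service, the `huggingface_hub` library's `hf_hub_url` and `HfApi.model_info` wrap the same endpoints and would usually be preferred over hand-built URLs.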
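Both the inference client and the training engine submit work to TACC's HPC systems. Assuming this goes through the Tapis Jobs service (the text does not spell out the mechanism), a submission is an authenticated POST of a JSON job description. The sketch below only prepares the request with the standard library; the tenant URL, app ID, version, and arguments are hypothetical placeholders, and a real Tapis app may require additional fields.

```python
import json
from typing import List, Optional
from urllib.request import Request


def build_job_request(
    base_url: str,
    token: str,
    app_id: str,
    app_version: str,
    name: str,
    app_args: Optional[List[str]] = None,
) -> Request:
    """Prepare (but do not send) a Tapis Jobs submission request."""
    body: dict = {"name": name, "appId": app_id, "appVersion": app_version}
    if app_args:
        # Command-line arguments for the app, in order.
        body["parameterSet"] = {"appArgs": [{"arg": arg} for arg in app_args]}
    return Request(
        url=f"{base_url.rstrip('/')}/v3/jobs/submit",
        data=json.dumps(body).encode("utf-8"),
        headers={"X-Tapis-Token": token, "Content-Type": "application/json"},
        method="POST",
    )


req = build_job_request(
    base_url="https://tacc.tapis.io",          # assumed tenant base URL
    token="<JWT from the Tapis Authenticator>",
    app_id="mlhub-finetune",                   # hypothetical Tapis app
    app_version="0.1",                         # hypothetical version
    name="finetune-demo",
    app_args=["--model", "google-bert/bert-base-uncased", "--epochs", "3"],
)
print(req.get_method(), req.full_url)
# Sending it would be: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes the job description easy to inspect and test before anything reaches the cluster.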

The Machine Learning Hub Portal contributes to the broader effort to democratize machine learning by providing user-friendly access to state-of-the-art models and addressing the challenges that non-technical users face. We hope this project will foster innovative collaboration and user engagement, paving the way for an inclusive and impactful future in machine learning research.

An illustration of the Machine Learning Hub architecture: